Written by Alice Njoki.

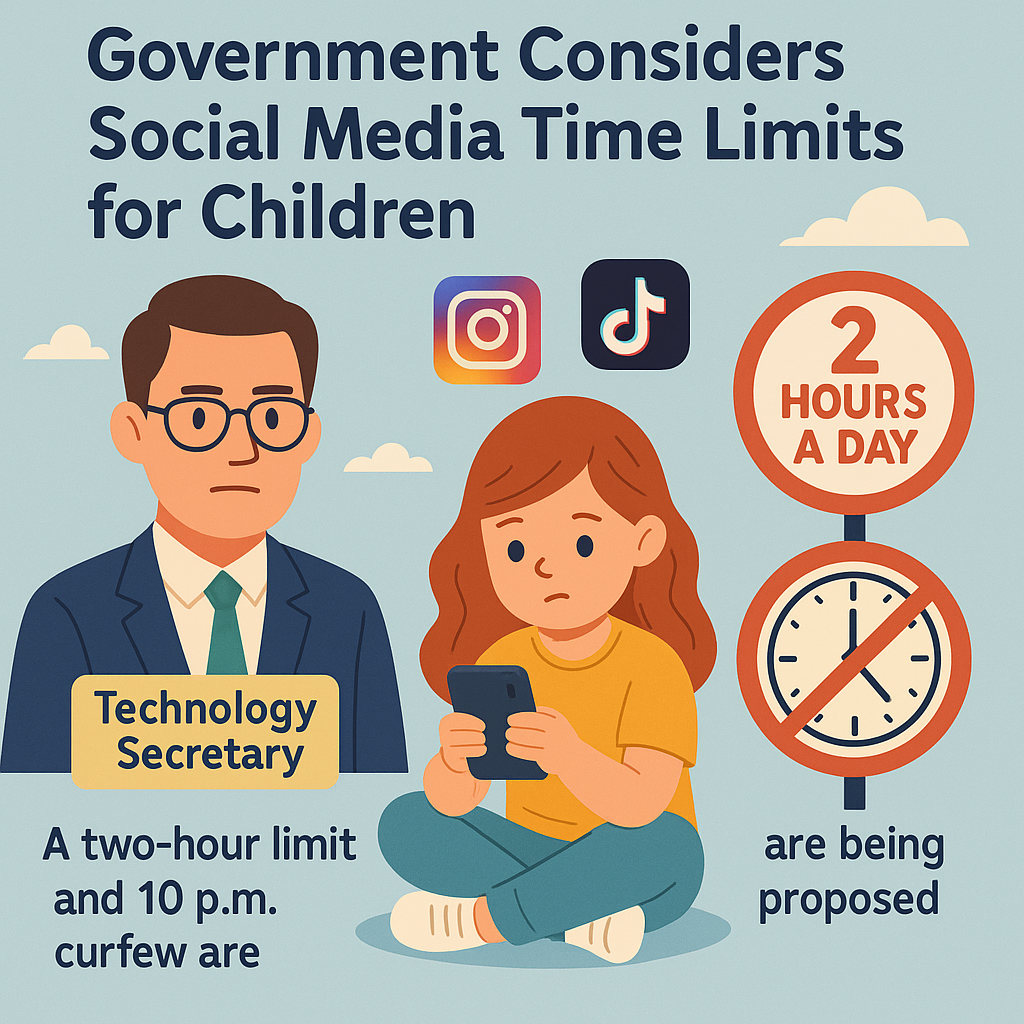

The UK government is exploring new rules to limit how long children can use social media each day. Proposals include a two-hour daily cap on individual social media apps and a curfew at 10 PM to protect young users from excessive screen time and harmful content.

Technology Secretary Peter Kyle has highlighted concerns about the addictive nature of some apps and smartphones and is reviewing what a healthy online life for children should look like. However, he said the government cannot yet discuss detailed plans publicly because the Online Safety Act, passed in 2023, has not been fully implemented.

Campaigners have criticized the government for delaying stronger laws. Ian Russell, whose 14-year-old daughter died after exposure to harmful online content, called for more effective legislation rather than temporary fixes. He said weak regulation and tech companies prioritizing engagement over safety have led to tragic consequences.

Some parental controls and app features already exist, such as TikTok’s default 60-minute screen time limit for under-18s and Instagram’s optional usage limits, but uptake is low and the controls are often confusing for parents.

Recognizing this growing digital divide between children and their caregivers, Mtoto News is taking a proactive step by launching the eAware Parenting Webinar. The webinar will run every Friday for five weeks, starting this coming Friday, 13 June 2025, from 7:00 pm to 8:30 pm.

The webinar is a virtual program designed to give parents the digital literacy, safety tools, and communication skills they need to engage confidently with their children’s online worlds. By fostering deeper understanding and shared digital experiences, it aims to bridge this gap, helping parents move beyond restrictive controls toward supportive, informed guidance that strengthens family connections in today’s fast-evolving digital landscape.

England’s children’s commissioner, Dame Rachel de Souza, called for bolder government action, emphasizing that children should not have to police their own online safety and that harmful content should be prevented regardless of time spent online.

Ofcom, the UK’s communications regulator, will also begin enforcing new rules from July 2025 that require tech firms to protect children from harmful content, with penalties for non-compliance. The measures include age checks, safer social feeds, and protections against bullying and dangerous challenges.

Similar efforts are underway internationally. For example, Australia has introduced a mandatory minimum age of 16 for certain social media accounts, and some US states have enacted laws requiring parental consent and privacy protections for teen social media users.

While limiting screen time is a step forward, experts argue that simply capping hours does not address the root causes of online harm. The focus must be on improving content moderation, reducing exposure to harmful material, and changing business models that prioritize user engagement at the expense of safety.

Parents and policymakers need clear, consistent tools and stronger regulations to create safer digital environments. Open conversations between parents and children about online risks remain essential, but government and tech companies must take greater responsibility to protect young users.